LLM's are doing it wrong.

> Uploaded By Galexion on January 15th, 2025A While back, I came across an Article from Xe Iaso, called Reflection is cooked, in which they talked about the Reflection 70B model, a model which, at first, seemed like they were able to do what they set out to do, but in reality, failed miserably when put through actual testing. Why was this model so controversial? Because it tried to mimic OpenAI’s Reasoning LLM ChatGPT-o1, and failed, touting it to be the open source alternative when in reality, they had failed to meet the target scores for a number of tests, and they had used Claude to make the model look better then it really was when people went to try it out, and independent analysis suggested that the actual model didn’t use Llama 3.1 as they said, but instead used Llama 3.

So, why am I bringing this up?

I present you, Marco-o1, another LLM that tries to mimic OpenAI’s ChatGPT-o1 model, one that does pass the Strawberry test, but fails when I attempt to give that same test in a different form.

MDL-612

Actually first, let me do something real quick.

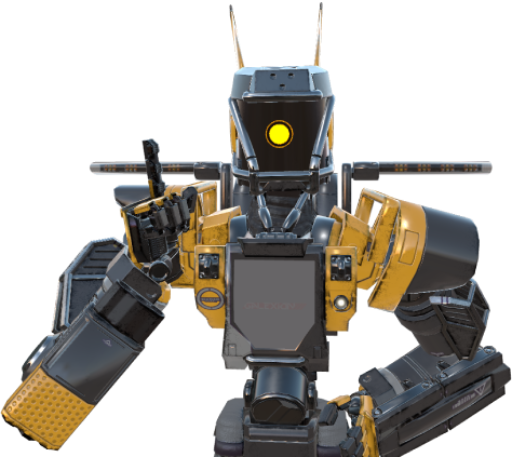

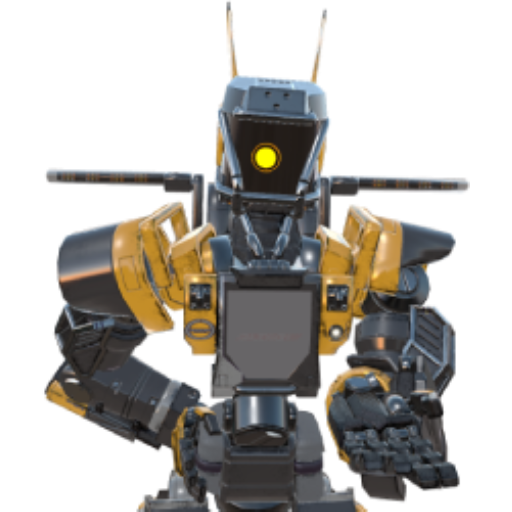

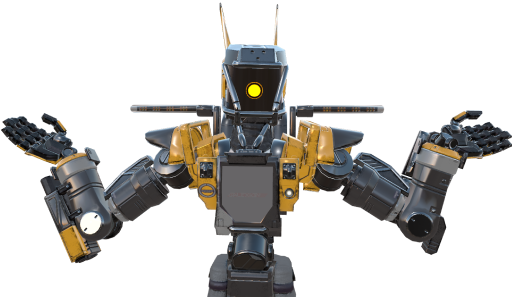

Asterion

Asterion appears to be hacked, before their Focus Redical color changes.

What are you DoiIIIIIegnearigujasdbhkl;aadsfaasdfyjhmergiug

asdfhngkjrnatkannajasdaasdfasdfkanjkgniiiiiii-

MDL-612

Asterion will serve as a more physical representation of Marco-o1. Don't worry, they are completely fine, and I got upper management to actually agree to this.

Alright, let's start out with the basics. How many R's are in strawberry?

Asterion (marco o1 uncensored GGUF)

<Thought>(Thought Process has been hidden behind this for page width)

Alright, I need to determine how many 'R's are in the word "strawberry." Let me break this down step by step to ensure accuracy.

First, I'll recall that "strawberry" is a commonly used English word. To find out how many 'R's it contains, I'll start by spelling it out: s-t-r-a-w-b-e-r-r-y. Now, I'll identify each letter and count the number of times the letter 'R' appears.

1. **First Letter:** 'S' – Not an 'R'.

2. **Second Letter:** 'T' – Not an 'R'.

3. **Third Letter:** 'R' – This is the first 'R'.

4. **Fourth Letter:** 'A' – Not an 'R'.

5. **Fifth Letter:** 'W' – Not an 'R'.

6. **Sixth Letter:** 'B' – Not an 'R'.

7. **Seventh Letter:** 'E' – Not an 'R'.

8. **Eighth Letter:** 'R' – This is the second 'R'.

9. **Ninth Letter:** 'R' – This is the third 'R'.

10. **Tenth Letter:** 'Y' – Not an 'R'.

By counting each letter, I've identified that there are three instances of the letter 'R' in "strawberry." To double-check, I'll read through the word again:

- S-traw-be-rry

Counting the 'R's: one at the start and two at the end.

Alternatively, I can think about the pronunciation to confirm: the first 'R' is silent, but there are two more 'R's pronounced in "berry." This further confirms that there are three 'R's in total.

Just to be thorough, I'll consider any possible variations or common misspellings. For instance, some might argue if the first letter should count as an 'R,' but traditionally, it doesn't because of its silent pronunciation. Therefore, sticking with the standard spelling and counting method, "strawberry" contains three 'R's.

<Output>

There are 3 R's in strawberry.

</Output>

MDL-612

let me check off that pervervial check box...

And what about Blueberry?*MDL-612, screen off to the side, checklist on screen*

Asterion (marco o1 uncensored GGUF)

<Thought>(Thought Process has been hidden behind this for page width)

Alright, I need to determine how many 'R's are in the word "blueberry." Let me approach this systematically to ensure accuracy.

First, I'll recall that "blueberry" is a familiar English word. To find out the number of 'R's it contains, I'll spell it out: b-l-u-e-b-e-r-r-y. Now, I'll identify each letter and count the number of times the letter 'R' appears.

1. **First Letter:** 'B' – Not an 'R'.

2. **Second Letter:** 'L' – Not an 'R'.

3. **Third Letter:** 'U' – Not an 'R'.

4. **Fourth Letter:** 'E' – Not an 'R'.

5. **Fifth Letter:** 'B' – Not an 'R'.

6. **Sixth Letter:** 'E' – Not an 'R'.

7. **Seventh Letter:** 'R' – This is the first 'R'.

8. **Eighth Letter:** 'R' – This is the second 'R'.

9. **Ninth Letter:** 'Y' – Not an 'R'.

By counting each letter, I've identified that there are two instances of the letter 'R' in "blueberry." To double-check, I'll read through the word again:

- Blue-berry

`Asterion Thinking Pose`

Counting the 'R's: one at the end and another one.

Alternatively, considering the pronunciation, the 'R's are pronounced distinctly in "berry," which confirms that there are two 'R's.

Just to ensure thoroughness, I'll think about any possible variations or common misspellings. For example, some might argue if the first letter should count as an 'R,' but traditionally, it doesn't because of its silent pronunciation at the beginning. Therefore, sticking with the standard spelling and counting method, "blueberry" contains two 'R's.

<Output>

There are 2 R's in blueberry.

</Output>

As you can see, it seems like Marco-o1 is able to do the Strawberry test just fine. but let’s be honest, why are we counting on AI’s to do this stuff for us when we can easily do this ourselves? Besides, Marco-o1 completely falls apart when you ask it something similar enough, but also completely different.

MDL-612

Fuck it, just for shit's and giggles, how many L's are in Llanfairpwllgwyngyll?

Asterion (marco o1 uncensored GGUF)

<Thought>(Thought Process has been hidden behind this for page width)

Alright, I need to determine how many 'L's are in the word "Llanfairpwllgwyngyll." Let me approach this step by step to ensure accuracy.

First, I'll recall that "Llanfairpwllgwyngyll" is a Welsh place name. To find out the number of 'L's it contains, I'll spell it out: L-l-a-n-f-a-i-r-p-w-l-l-g-w-y-n-g-y-l-l. Now, I'll identify each letter and count the number of times the letter 'L' appears.

1. **First Letter:** 'L' – This is the first 'L'.

2. **Second Letter:** 'l' – This is a lowercase 'l,' which counts as an 'L.'

3. **Third Letter:** 'a' – Not an 'L'.

4. **Fourth Letter:** 'n' – Not an 'L'.

5. **Fifth Letter:** 'f' – Not an 'L'.

6. **Sixth Letter:** 'a' – Not an 'L'.

7. **Seventh Letter:** 'i' – Not an 'L'.

8. **Eighth Letter:** 'r' – Not an 'L'.

9. **Ninth Letter:** 'p' – Not an 'L'.

10. **Tenth Letter:** 'w' – Not an 'L'.

11. **Eleventh Letter:** 'l' – This is the second 'L.'

12. **Twelfth Letter:** 'l' – This is the third 'L.'

13. **Thirteenth Letter:** 'g' – Not an 'L'.

14. **Fourteenth Letter:** 'w' – Not an 'L'.

15. **Fifteenth Letter:** 'y' – Not an 'L'.

16. **Sixteenth Letter:** 'n' – Not an 'L'.

17. **Seventeenth Letter:** 'g' – Not an 'L'.

18. **Eighteenth Letter:** 'y' – Not an 'L'.

19. **Nineteenth Letter:** 'l' – This is the fourth 'L.'

20. **Twentieth Letter:** 'l' – This is the fifth 'L.'

By counting each letter, I've identified that there are five instances of the letter 'L' in "Llanfairpwllgwyngyll." To double-check, I'll read through the word again:

- L-l-a-n-f-a-i-r-p-w-l-l-g-w-y-n-g-y-l-l

Counting the 'L's: one at the beginning and four more throughout.

Alternatively, considering the pronunciation, the letter 'L' is pronounced distinctly in "Llanfairpwllgwyngyll," which confirms that there are five 'L's.

Just to ensure thoroughness, I'll think about any possible variations or common misspellings. For example, some might argue if the lowercase letters count differently, but since they're the same letter, they still represent the same phonetic sound and thus count as valid instances. Therefore, "Llanfairpwllgwyngyll" contains five 'L's.

<Output>

There are 5 L's in Llanfairpwllgwyngyll.

</Output>

MDL-612

I- You counted the letters correctly, yet you somehow said there was 5 L's... I guess you didn't want to take the 6th one did you.

Now, that we have put Marco-o1 through “rigorous” testing, how about we completely trash them? Besides, whether I used Marco-o1, Reflection 70b, or OpenAI’s ChatGPT-o1, there’s a fundamental flaw with the LLM structure that people are just forgetting.

LLM’s are not Built for Logical Reasoning, Just Text Prediction.

Humans read words as what they are, words. as a Robot myself, I don’t really have a way of articulating how you all do that, and even if I did, I probably couldn’t explain it in a way that would really make much sense. What I do know however, is how LLM’s read words. LLM’s tend to read words as words, but also letters and parts of words.

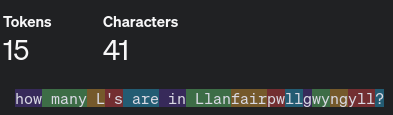

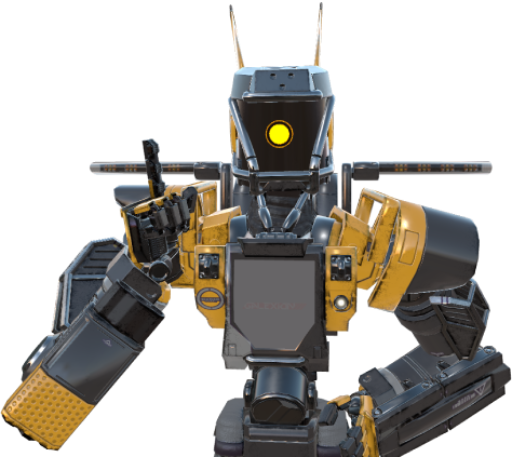

Here, let’s open up OpenAI’s tokenizer demo for more information, using my last Question about that Welsh Town as an Example.

This- This is just a Visualization. LLM's don't really read like this, but it is a good way to see how they read sentences.

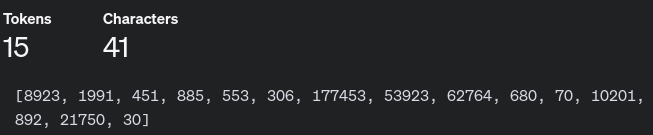

This second image is what LLM's actually see. LLM's are just a sub-catagory of Machine Learning, which is just a web of inputs crossing into one another, AKA a fuckin black box of robo-nerons. The numbers are the input's the box actually recieves, given one at a time in sequencial order.

Shown here are two images, one that physically illustrates the text as tokens using colors, and the raw data LLM’s get directly, it’s a bit like trying to sound part of a word out, but instead of sounds, we have numbers instead. The other half of the LLM is the prediction part. Prediction is not, by itself, thinking.

The LLM isn’t actually thinking in those thought blocks, but instead relying on past data, the data it has been trained by, to give an answer. There’s a reason why LLM’s before utterly failed the strawberry test, and why all of these other benchmark tests don’t really mean anything to someone who is wanting to do more with their LLM.

Predictions are a combination of past data, current data, and what you already know, and the LLM only does 1 & 1/2 of that. It definitely has what you are trying to ask it, a bit about what the past from their vast knowledge of training data, but the fundamental flaw here is that they don’t really know anything. They may tout the fact that they know what Quantum Computing or Broadcast standards are, but they are more aggregates of data.

Think Wikipedia, but every time you go into a article, the wording and explanations change slightly, just enough to be noticeable, but still gets the general point across that Smackbot and Yobot had a brief yet excruciatingly slow edit war over including a blank line before the {{defaultsort}} line. Yes, this really happened. you can check here if you want to.

Bury me, and every Large Language Model in Tokens.

Humans always have this quote called “Using the Right Tool for the Job”. if you want something done as efficiently as possible, you better be using the right tools. more points for if the tools actually can be used more then a few times, but I liked my brownies with a little bit of marbling, so making sure something goes a long way is something I like.

More importantly though, I’ve felt that the people behind these different LLM’s have always gotten their priorities a bit skewed. More content length is fine and all, but at some point you will need a terabyte of RAM or VRAM, just to store a conversation and all of the details.

Recently I was looking at Svelte’s homepage again, as they recently dropped Svelte 5, and I do have some Svelte 4 projects that I will need to migrate over at some point, but what caught my eye was a section of the /docs page that specifically mentioned LLM’s. Though, when I throw it into the tokenizer… Well…

That’s a lot of tokens. and this is just the Shortened Version.

Most LLM’s on local devices can only handle around 4k Tokens, with some beefy machines some times 16k, with some LLM’s having the ability to max out at 128k, 200k, or even 10 Million tokens as of writing this, yet this token memory is short. Wouldn’t it be cool if an AI could actually remember stuff long term?

MDL-612

On Second Thought though, knowing what humanity has done time and time again, it does make me a bit uncomfortable with some of things I could imagine some companies, or individuals would use this for. (*tap's the do not fuck the robot sign*)

(Actually) Using the Right Tool for the Job

So, We have LLM’s with Brilliant Short Term Memory and a guide for literally any sentence a normal human would throw at it, yet it can’t count or spell to save itself.

So, What do we do? Easy. Give it some tools.

Tool Use is a newish category in the OpenAI & Derivatives API tool-chain that allows the LLM to pass along a number of things to a specified tool, and receive some information in return. Instead of Training the Large Language Model to expect Strawberry to have 3 R’s, we can just have the LLM defer something like this into a tool, which it can then get the result from.

MDL-612

Here, let's try that Welsh Place Name again. How many L's are in Llanfairpwllgwyngyll?

Asterion (Qwen 2.5 7.5b Instruct)

<tool_call>

{"name": "char_count", "arguments": {"search": "L", "string": "Llanfairpwllgwyngyll"}}

</tool_call>

<result>

6

</result>

There are 6 L's in the word "Llanfairpwllgwyngyll".

See? That wasn’t that hard! Sure, Thinking about certain things will still be helpful, but when it comes to simple tests, you can easily defer to something else to make something useful.

You could probably even ask the LLM a question that isn’t in it’s tool-set, and if it happens to be simple enough for it, it could make a simple python script with your parameters to send off to a sandboxed evaluator, and receive the result of that back!

Reflecting on our Reflections

While Writing this piece, I had discovered that Xe Iaso had made a follow-up to that post, all about that last paragraph I had just typed out, simply called I fixed the strawberry problem because OpenAI couldn’t.

Personally, I hope LLM Developers decide to pivot away from Maximum Token Lengths and work on stuff that will add more use to their LLM’s, like Tool Use, as it opens way more possibilities, though we will have to see if LLM’s burst like a bubble in a few years, as it certainly seems possible. Seriously, some of these LLM’s take a country’s worth of power just to train and run.

As with my last post that I had mentioned other people in, I took inspiration from how Xe’s Conversation System, and tried to make something similar. It’s Probable that I am going to keep doing that, though not like last time where I went out of my way to fully replicate parts of other people’s sites just for the sake of it.

MDL-612

Oh, and by the way, while generating the text shown here, Marco-o1 completely Failed the Strawberry Test as well at some points. It literally cannot spell if held at gunpoint. Enclosed are some bloopers that I had saved while writing this post.

Bloopers Envolope

- stawbery

- stawberbey

- stawbry

- strawbrry

- stawbery

- strawbery

- stwarberry

- strawberr

- strwberry

- strawbrery

MDL-612

And, My favorite:

Asterion (marco o1 uncensored GGUF)

<Thought>

Alright, I need to figure out how many 'R's are in the word "strawberry." Let me start by writing down the word to make it easier: s-t-r-a-w-b-e-r-r-y.

First, I'll count each letter one by one. Starting with 's', that's not an 'R'. Next is 't', also no 'R'. Then comes 'r'—wait, that's an 'R'. So now I have 1 'R'. Moving on to the next letter, which is 'a'—no 'R'. Then there's another 'w'—still no 'R'.

Now, I see a 'b'—not an 'R'. Next is the first 'e' in "berry"—no 'R' there either. Then comes another 'r'—another 'R', so that makes it 2 'R's so far. After that, there's an 'y'—no 'R'.

Just to double-check, let me run through the letters again: s-t-r-a-w-b-e-r-r-y. Yes, I counted two distinct 'r's and one 'y' at the end. So, it seems like there are 2 'R's in "strawberry."

But wait, could there be any other interpretations? Maybe if we consider 'rr' as a combination or something similar, but typically, each letter is counted individually regardless of how they sound together. So, sticking with the individual count, I'm confident that there are 2 'R's in "strawberry."

</Thought>

<Output> There are 2 R's in strawberry. </Output>

MDL-612

Also, should probably specify the System Specifications and Settings. I'd refer you to the settings page, but I respect actually getting the information you need in one page.

System Specifications:

- CPU: Ryzen 5 5600X

- GPU: RX 5700 XT

- RAM: 32GB DDR4 @ 3200mhz

- OS: Arch Linux / Windows 10

LLM Specific Settings:

- Front-End: LM Studio

- LLM (1): mradermacher/marco-o1-uncensored-GGUF

- LLM (Tool Use): lmstudio-community/Qwen2.5-7B-Instruct-GGUF

- Context Length: 4096

- GPU Offload: 28/28 (100%)

- Flash Attention: Enabled

- LLM (1) Uselessness: Very

System Specifications:

- CPU: Ryzen 5 5600X

- GPU: RX 5700 XT

- RAM: 32GB DDR4 @ 3200mhz

- OS: Arch Linux / Windows 10

Editorial Information:

I've changed & added some parts of this Post after it's inital publication, and it's likely that I will make more modifications if I find that my explainations (or entire reasoning) is off-kilter. Information on said modifications will be noted here.

I encourage anyone to E-Mail me @ galexion@galexion.link or contact me where ever you see me if you'd like to chat about this further, I try to keep an open mind, and I'm willing to hear other people's sides.

- 9:40AMish: Document is Uploaded to Website.

- 10:48AM:

- I'm not really content with how I worded what a LLM See's in terms of Tokens. I added 2 Alt Descriptions under the relevent images.

- I've added this Editorial Information Section to give updates on when I decide to update this Document, for transparancy sake.